Charlotte man sentenced to 12 years in CSAM case

Marquis Drakeford Bynum, 45, violated his supervised federal release

A Charlotte man was sentenced to 12 years in federal prison for violating his federal release agreement by possessing child sexual abuse material (CSAM) involving a “prepubescent minor.”

According to a press release by the U.S. Attorney's Office for the Western District of North Carolina, 45-year-old Marquis Drakeford Bynum was ordered to serve a “lifetime of supervised release, to register as a sex offender after he is released from prison, and to pay $33,000 in restitution,” by U.S. District Judge Max O. Cogburn Jr.

The press release includes details of the case:

“As reflected in court records, this case arose from Bynum’s violation of the terms of his federal supervised release imposed following his 2007 conviction for transporting and possessing CSAM. According to court records, on August 16, 2023, the U.S. Probation Office (USPO) conducted a search of Bynum’s residence pursuant to his probationary terms. During the search, probation officers recovered two cell phones and a flash drive. U.S. Probation and the FBI forensically analyzed the evidence and found thousands of images and videos depicting the sexual abuse of children as young as toddlers. New federal charges were filed against Bynum, and on April 10, 2024, he pleaded guilty to possession of child pornography involving minors under the age of 12.”

In late March 2024, Bynum entered into a plea deal, which was accepted in early April of that year. The plea agreement was not accessible in the PACER system.

Bynum's sentencing references a violation of his federal supervised release. In 2010, he was convicted for possessing child pornography and was sentenced to 192 months in prison.

A summary of the 2010 case, which can be viewed here, notes that Bynum's case involved "5000 photos and 150 videos" of children that were uploaded and downloaded over the span of several years.

The case was brought as part of the US DOJ’s Project Safe Childhood, which was launched in 2006 and is a nationwide effort fighting child sexual exploitation and abuse.

Project Safe Childhood was also involved in the FBI’s “Operation Restore Justice,” which resulted in 205 arrests and 115 children rescued.

More To The Story

This is not the first time this site has reported on CSAM-involved cases.

In late April, Prasan Nepal was arrested in North Carolina by the FBI and charged by the US Department of Justice (US DOJ) with various crimes, including CSAM-related offenses.

The US DOJ identified Nepal as a leader of the group “764,” a decentralized, global sextortion network classified by the US DOJ as a nihilistic violent extremist (NVE) network and a terror network. 764 is considered a "tier one" terrorism threat by the FBI.

The identification of 764 is just the tip of the iceberg when it comes to CSAM.

Google’s transparency reporting puts the tally at over 2.58 million CSAM reports that Google sent to the National Center for Missing & Exploited Children between July 2024 and December 2024 alone.

For that time period, Google identified 282,584 Google user accounts involved and took “appropriate action.”

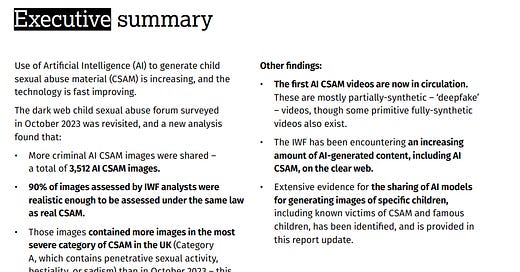

The amount of CSAM is growing rapidly with the help of AI.

In its 2023 report on the topic, the Internet Watch Foundation (IWF) found “20,000 AI-generated images on a dark web forum in one month where more than 3,000 depicted criminal child sexual abuse activities.”

In an updated July 2024 report, IWF found that activity had grown, stating, “AI-generated imagery of child sexual abuse has progressed at such an accelerated rate that the IWF is now seeing the first realistic examples of AI videos depicting the sexual abuse of children.”

“These incredibly realistic deepfake, or partially synthetic, videos of child rape and torture are made by offenders using AI tools that add the face or likeness of a real person or victim,” IWF reported.

The executive summary is below and the full report can be accessed directly through this link.

These AI-generated images and videos can draw, and are drawing, from photos stolen from various social media platforms and websites.

These stolen photos are typically on a user's page and are publicly viewable.

For instance, a parent posts a school photo of their child on Facebook with the viewing privileges set to public. Anyone can then download that photo and use it to create CSAM.

This ad is over a year old, but it encapsulates the process I just described.